Dear folks, ByteDance has once again made a remarkable move in the AI realm! Collaborating with research teams from multiple universities, it has integrated the advanced vision - language model LLaVA and the segmentation model SAM - 2, unveiling an amazing new model, Sa2VA! 🎉

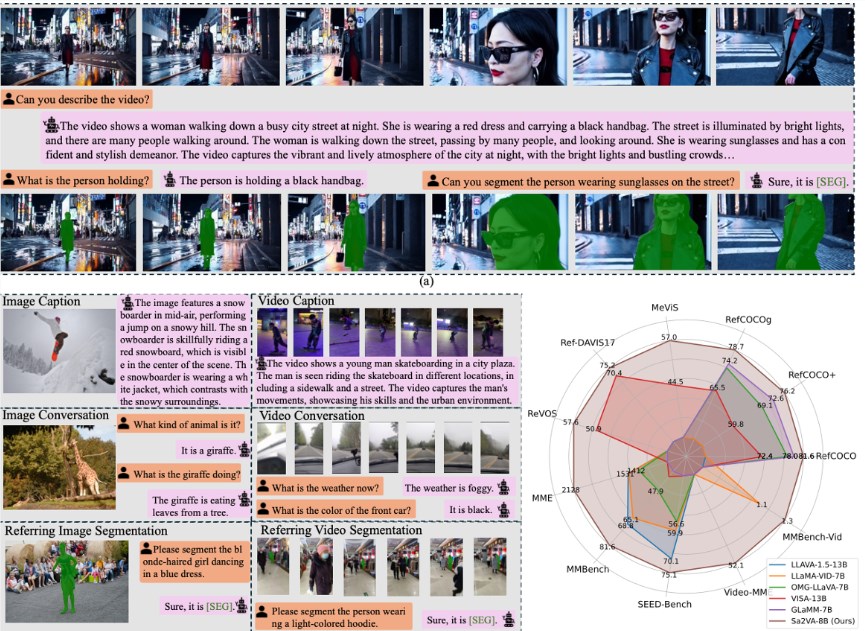

LLaVA is an open - source vision - language model that excels in macroscopic video narration and content comprehension, yet it struggles a bit with detailed instructions. SAM - 2, on the contrary, is an outstanding image segmentation expert capable of identifying and segmenting objects within images, but it lacks language - understanding capabilities. To leverage their respective strengths, Sa2VA effectively combines these two models through a simple and efficient "code - word" system. 🧐

The architecture of Sa2VA resembles a dual - core processor. One core is tasked with language understanding and dialogue, while the other is responsible for video segmentation and tracking. When a user enters an instruction, Sa2VA generates a specific instruction token and passes it to SAM - 2 for concrete segmentation operations. In this manner, the two modules function in their areas of expertise and can also engage in effective feedback - based learning, constantly enhancing the overall performance. 😎

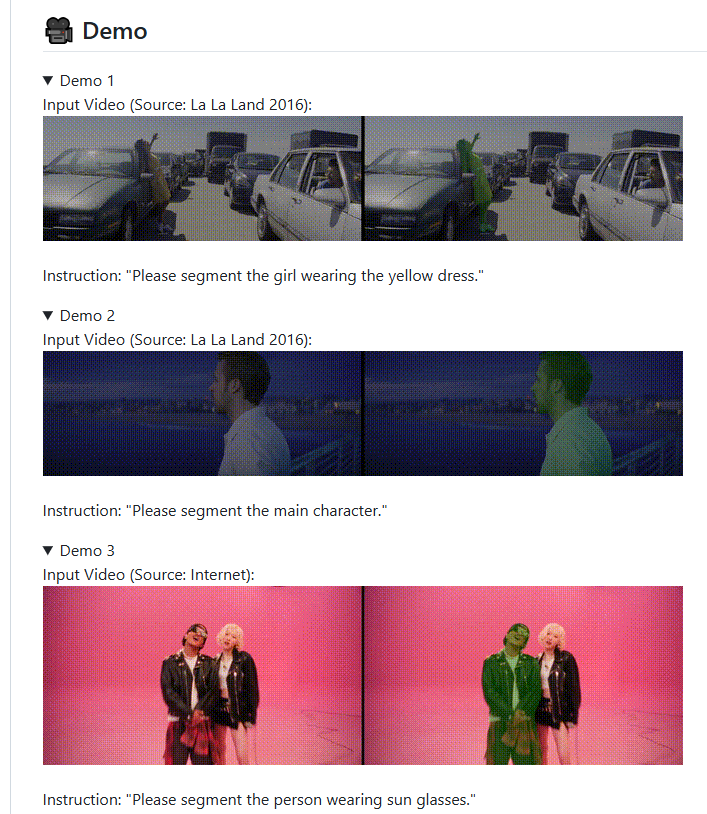

The research team has also designed a multi - task joint training curriculum for Sa2VA to boost its capabilities in image and video understanding. In numerous public tests, Sa2VA has demonstrated excellent performance, particularly shining in the video referential - expression segmentation task. It can accurately segment in complex real - world scenarios and can even track target objects in real - time within videos, boasting extremely strong dynamic - processing capabilities. 👏

Moreover, ByteDance has made various versions of Sa2VA and its training tools publicly available, encouraging developers to conduct research and applications. This provides abundant resources for researchers and developers in the AI field, propelling the development of multimodal AI technology.

Here are the project addresses:

https://lxtgh.github.io/project/sa2va/

https://github.com/bytedance/Sa2VA

Dear friends, are you looking forward to Sa2VA? Come and share your thoughts in the comment section! 🧐

#ByteDance #Sa2VA #Multimodal Intelligent Segmentation #LLaVA #SAM-2 #AI Model #Open-source

%s에 답글 남기기