🤖 Claude Code CLI

Claude Code CLI is a command-line programming assistant from Anthropic. Built on the company's Claude models (such as Opus 4 and Sonnet 4), it emphasizes strong reasoning and in-depth code understanding.

Advantages:

- In-depth Code Understanding and Complex Task Handling: Claude Code can understand the structure of a codebase and its complex logical relationships. It supports a context window of hundreds of thousands of tokens, enabling coordinated multi-file edits and cross-file context understanding, and it is particularly good at handling medium-to-large projects.

- Sub-agent Architecture and Powerful Toolset: It supports a sub-agent architecture that splits complex tasks into subtasks for parallel processing, achieving multi-agent-like collaboration. The built-in toolset is rich and specialized, including finer-grained file operations (such as MultiEdit for batch modification), efficient file search (the Grep tool), task management and planning (TodoWrite/Read, the Task sub-agent), and deep Git/GitHub integration, such as understanding PRs, reviewing code, and handling comments.

- Integration with Enterprise-level Toolchains: Claude Code integrates with IDEs, showing code changes directly in the IDE's diff view, and can also run in CI/CD pipelines as a GitHub Action. Mentioning @claude in a PR or Issue comment lets it analyze code or fix errors automatically.

- Fine-grained Permission Control and Security: It provides a comprehensive, fine-grained permission mechanism that lets users control each tool's permissions through configuration files or command-line parameters. For example, it can allow or prohibit a specific Bash command, limit which files can be read or written, and set different permission modes (such as plan mode, which is read-only). In an enterprise environment, system administrators can also enforce security policies that users cannot override.
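
The allow/prohibit idea behind per-command permissions can be illustrated with a small sketch. This is not Claude Code's actual configuration syntax or implementation: the `RULES` dictionary, its pattern strings, and the `is_allowed` helper are all hypothetical names chosen for illustration, and the glob matching is just one plausible way to express such rules.

```python
from fnmatch import fnmatchcase

# Hypothetical rule set: these pattern strings are illustrative only and are
# NOT Claude Code's real configuration format.
RULES = {
    "allow": ["git status", "git diff*", "npm run test*"],
    "deny": ["rm *", "git push*"],
}


def is_allowed(command: str, rules: dict, read_only: bool = False) -> bool:
    """Return True if a shell command may run under the given rules."""
    if read_only:
        # In this sketch, a read-only (plan-style) mode blocks every shell command.
        return False
    if any(fnmatchcase(command, pattern) for pattern in rules["deny"]):
        return False  # an explicit deny always wins over an allow
    # Anything not explicitly allowed is rejected by default.
    return any(fnmatchcase(command, pattern) for pattern in rules["allow"])
```

Checking deny rules first and rejecting anything not explicitly allowed keeps the default safe: a command runs only when a rule says it may.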

Disadvantages:

- It is a commercial paid product with relatively high subscription fees.

- Its image recognition is relatively weak: when analyzing interface screenshots or converting design mockups into code, its accuracy and fidelity may be inferior to some competitors.

Scope of Capabilities:

Claude Code CLI is well suited to medium-to-large project development, codebases that need long-term maintenance, and scenarios that demand high code quality and AI assistance for in-depth debugging, refactoring, or optimization. It is relatively mature in terms of enterprise-level security, feature completeness, and ecosystem.

Usage:

It is usually installed globally via npm: npm install -g @anthropic-ai/claude-code. After installation, run claude login to go through the OAuth authentication process. The first time it runs, it guides you through account authorization and theme selection, after which you can enter interactive mode. You can then ask the AI to generate, debug, or refactor code through natural language instructions.

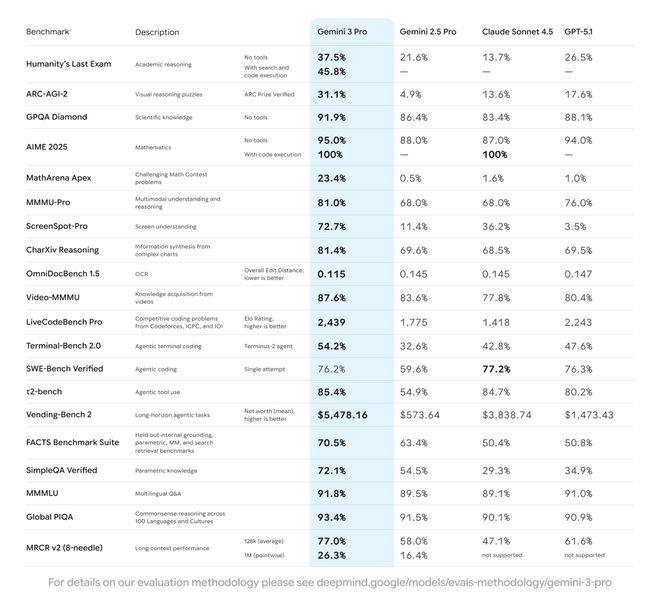

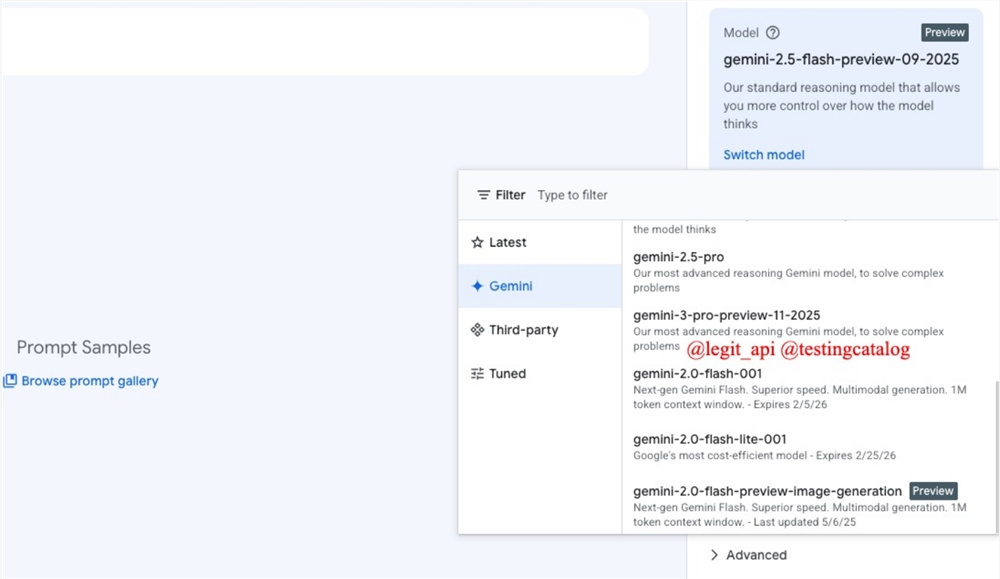

🔮 Gemini CLI

Gemini CLI is an open-source command-line AI tool from Google. Built on the Gemini 2.5 Pro model, it aims to turn the terminal into an active development partner.

Advantages:

- Free and Open-source with Generous Quota: It is open source under the Apache 2.0 license, which makes it highly transparent. Users with a personal Google account get a free quota of 60 requests per minute and 1000 requests per day, which is highly competitive among similar tools.

- Ultra-long Context Support: It supports a context window of up to 1 million tokens, so it can handle large codebases and can even read an entire project at once, which makes it well suited to large projects.

- Terminal-native and Powerful Agent Capability: Designed specifically for the command-line interface, it minimizes developers' context switching. It uses a "reason and act" (ReAct) loop, combined with built-in tools (such as file operations and shell commands) and Model Context Protocol (MCP) servers, to complete complex tasks such as fixing bugs and implementing new features.

- High Extensibility: Through MCP servers, bundled extensions, and the GEMINI.md file for custom prompts and instructions, it is highly customizable.
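
The ReAct loop mentioned above can be sketched in a few lines. This is a minimal illustration of the general pattern, not Gemini CLI's implementation: `scripted_model` is a hypothetical stand-in for the LLM, and the two entries in `TOOLS` are fake tools that only return placeholder strings.

```python
from typing import Callable

# Hypothetical tools; a real agent would expose file and shell operations.
TOOLS: dict[str, Callable[[str], str]] = {
    "read_file": lambda path: f"<contents of {path}>",
    "run_shell": lambda cmd: f"<output of {cmd}>",
}


def scripted_model(history: list[str]) -> dict:
    """Stand-in for the LLM: picks the next step from the transcript so far."""
    observations = [h for h in history if h.startswith("observation:")]
    if not observations:
        return {"thought": "I need to see the failing test.",
                "action": "read_file", "input": "test_app.py"}
    if len(observations) == 1:
        return {"thought": "Now run the tests.",
                "action": "run_shell", "input": "pytest -q"}
    return {"thought": "I have enough information.", "final": "done"}


def react_loop(task: str, model=scripted_model, max_steps: int = 5):
    """Alternate reasoning and tool calls until the model gives a final answer."""
    history = [f"task: {task}"]
    for _ in range(max_steps):
        step = model(history)
        history.append(f"thought: {step['thought']}")
        if "final" in step:
            return step["final"], history
        # Act: run the chosen tool, then feed the result back as an observation.
        result = TOOLS[step["action"]](step["input"])
        history.append(f"action: {step['action']}({step['input']})")
        history.append(f"observation: {result}")
    return "stopped: step limit reached", history
```

The `max_steps` cap is a common safeguard in loops like this, since a model that never produces a final answer would otherwise run indefinitely.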

Disadvantages:

- Its instruction following and intent understanding are sometimes less accurate than Claude Code's, with slightly weaker overall performance.

- There are potential data security risks in the free version. User data may be used for model training, making it unsuitable for handling sensitive or proprietary code.

- The output quality may fluctuate. User feedback indicates that Gemini 2.5 Pro is sometimes automatically downgraded to the less powerful Gemini 2.5 Flash model, which lowers output quality.

- Its integration with enterprise-level development environments is relatively weak; it is positioned more as a standalone terminal tool.

Scope of Capabilities:

Gemini CLI, with its large context window and free tier, is well suited to individual developers, rapid prototyping, and exploratory programming tasks. It can handle large codebases but is relatively weak at understanding complex logic and at deep integration with enterprise-level toolchains.

Usage:

Install via npm: npm install -g @google/gemini-cli. After installation, run the gemini command. The first time it runs, it guides you to authorize your Google account or configure a Gemini API key (by setting the environment variable: export GEMINI_API_KEY="your API Key").

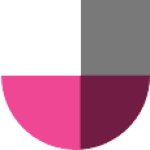

🌐 Qwen Code CLI

Qwen Code CLI is a command-line tool that Alibaba developed and optimized on the basis of Gemini CLI, designed specifically to unleash the potential of its Qwen3-Coder model in agentic programming tasks.

Advantages:

- Deep Optimization for Qwen3-Coder: It has customized prompts and function-calling protocols for the Qwen3-Coder series of models (such as qwen3-coder-plus), maximizing their performance in agentic coding tasks.

- Support for Ultra-long Context: Relying on the Qwen3-Coder model, it natively supports 256K tokens and can be extended to 1 million tokens, making it suitable for medium-to-large projects.

- Open-source and Supports OpenAI SDK Format: Developers can conveniently call the model through OpenAI-compatible APIs.

- Wide Range of Programming Language Support: The model natively supports up to 358 programming and markup languages.
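
To get a feel for what the 256K and 1 million token windows mean in practice, a rough size check can help. The sketch below is an estimate only: the 4-characters-per-token ratio is a common rule of thumb rather than the output of the Qwen tokenizer, the window sizes are taken as round numbers from the text above, and all names are hypothetical.

```python
CHARS_PER_TOKEN = 4          # rough rule of thumb (assumption), not a tokenizer result
NATIVE_WINDOW = 256_000      # "256K tokens", treated as a round number
EXTENDED_WINDOW = 1_000_000  # "1 million tokens"


def estimate_tokens(text: str) -> int:
    """Estimate the token count of a string using ceiling division."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(texts, window_tokens: int, reserve_tokens: int = 0) -> bool:
    """Check whether all texts, plus a reserve for the reply, fit in the window."""
    total = sum(estimate_tokens(t) for t in texts)
    return total + reserve_tokens <= window_tokens
```

For example, source files totalling about 1.2 million characters come to roughly 300,000 estimated tokens, which would exceed the native window but fit in the extended one. Real token counts vary with language and content, so an actual tokenizer should be used when precision matters.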

Disadvantages:

- Token consumption can be fast, especially with large-parameter models (such as the 480B model), resulting in higher costs, so users need to watch their usage closely.

- On complex tasks, it may sometimes get stuck in loops or perform worse than top-tier models.

- Its interpretation of tool calls is sometimes inaccurate.

Scope of Capabilities:

Qwen Code CLI is particularly suitable for developers who are interested in or prefer the Qwen models, as well as scenarios that require code understanding, editing, and some workflow automation. It performs well in agentic coding and long-context processing.

Usage:

Install via npm: npm install -g @qwen-code/qwen-code. After installation, configure environment variables to point to Alibaba Cloud's OpenAI-compatible DashScope endpoint and set the corresponding API key: export OPENAI_API_KEY="your API key", export OPENAI_BASE_URL="https://dashscope-intl.aliyuncs.com/compatible-mode/v1", export OPENAI_MODEL="qwen3-coder-plus".
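
To show how these three variables fit together, here is a minimal sketch that builds (but does not send) a chat request from them using only the Python standard library. It assumes the endpoint follows the usual OpenAI-style /chat/completions path and Bearer-token header. The `build_chat_request` helper is a hypothetical name, and this is not how Qwen Code itself issues requests.

```python
import json
import os
import urllib.request


def build_chat_request(prompt: str, env=os.environ) -> urllib.request.Request:
    """Build (but do not send) a chat completion request from the env vars."""
    base_url = env["OPENAI_BASE_URL"].rstrip("/")
    payload = {
        "model": env["OPENAI_MODEL"],
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        # Assumed path: the conventional OpenAI-compatible chat endpoint.
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {env['OPENAI_API_KEY']}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the request to `urllib.request.urlopen` would actually send it, which requires a valid API key. Accepting `env` as a parameter makes the helper easy to try with a plain dictionary instead of real environment variables.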

🚀 CodeBuddy

CodeBuddy is an AI programming assistant launched by Tencent Cloud. Strictly speaking, it is not only a CLI tool but an assistant that also ships as IDE plugins and in other forms. Its core capabilities nevertheless overlap with and are comparable to those of CLI tools, and it deeply integrates Tencent's self-developed Hunyuan large model and the DeepSeek V3 model.

Advantages:

- Integration of Product, Design, and R&D: It combines requirement document generation, design-to-code conversion (such as converting Figma designs to production-level code with a fidelity of up to 99.9%), and cloud deployment, delivering end-to-end AI-assisted development from product design to deployment.

- Localization Optimization and Tencent Ecosystem Integration: Optimized specifically for Chinese developers, it provides better Chinese-language support and deeply integrates Tencent Cloud services (such as CloudBase), supporting one-click deployment.

- Dual-model Driven: It integrates Tencent's Hunyuan large model and the DeepSeek V3 model, providing high-precision code suggestions.

- Visual Experience: It provides a Webview function, allowing direct preview of code debugging results within the IDE, with a smooth interactive experience.

Disadvantages:

- The interaction of some functions (such as @ symbol interaction) may need to be further simplified to improve operational convenience.

- The code scanning speed may be slow in large projects.

- Plugin compatibility with editors such as VS Code still needs improvement.

- Currently, an invitation code may be required for use.

Scope of Capabilities:

CodeBuddy is well suited to developers and enterprises that need full-stack development support, want end-to-end AI assistance from design to deployment, and are deeply integrated into the Tencent Cloud ecosystem. It is especially suitable for quickly validating MVPs and accelerating product iterations.

Usage:

CodeBuddy is mainly used as an IDE plugin (such as the VS Code plugin), and it can also run in an independent IDE. Usually, users need to install the plugin and log in to their Tencent Cloud account to start experiencing features like code completion and the Craft mode.

In general, Claude Code CLI, Gemini CLI, Qwen Code CLI, and CodeBuddy each have their own focuses and are actively exploring how to better assist and transform the programming workflow with natural language. The choice depends on your specific needs, technology stack, budget, and preferences for different ecosystems. Understanding their technical principles and challenges can also help us view and apply these powerful tools more rationally, making AI a truly capable assistant in the development process.