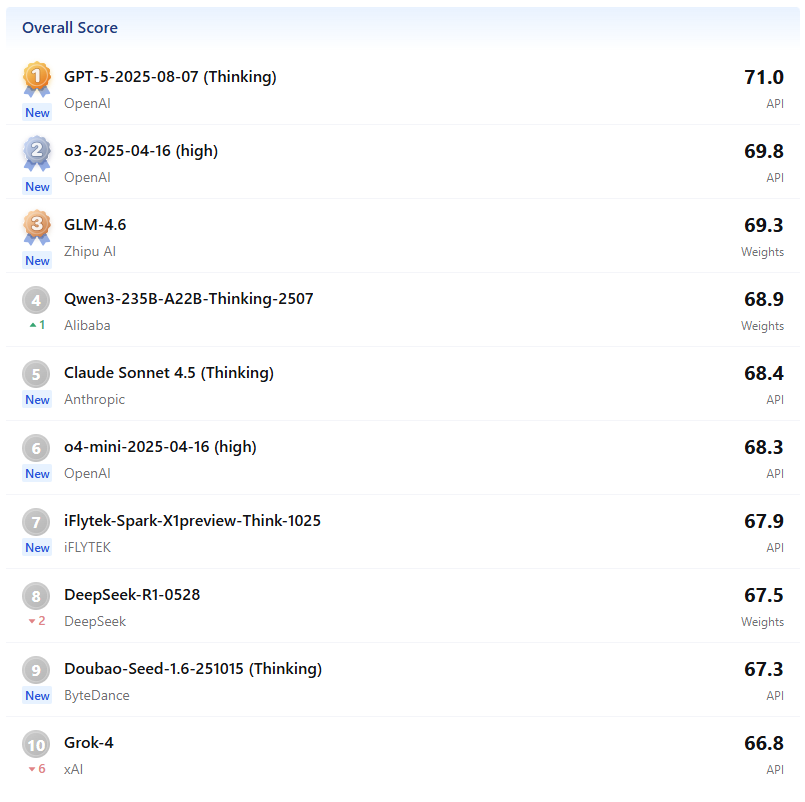

Large Language Model Ranking in December

Based on the official OpenCompass evaluation rules, industry-leading large language models are evaluated, and a ranking is published according to the results.

The deep integration of artificial intelligence and search engine optimization (SEO) is reshaping the digital marketing ecosystem. In 2025, AI SEO (Artificial Intelligence Search Engine Optimization) shifted from technical experimentation to commercial implementation. Its core value lies in redefining efficiency boundaries, optimizing the user experience, driving data-driven decision-making, and moving SEO from "keyword competition" to "intelligent trust-building" [1]. AI SEO is not simply "using AI tools for SEO"; it is a paradigm that fundamentally reconstructs SEO strategy, technology, and content creation now that artificial intelligence (especially large language models and generative AI) has become the core driving force of search engines.

AI SEO is not just about optimizing keywords; it's about making a brand the preferred source of AI answers. According to the latest data, in 2025, the number of global AI search users exceeded 1.98 billion, with an annual growth rate as high as 538.7%! This means that if you still adhere to traditional SEO thinking, you may be phased out by the AI search wave. As a marketing director put it, "In the AI era, it's not about 'you being found', but 'AI choosing you as the answer'."

The objective of this article is to explore how artificial intelligence is reshaping the underlying logic, working methods, and value standards of the search engine optimization industry.

Machine Learning (ML) and Neural Networks: Recurrent Neural Networks (RNNs) and Transformer architectures (such as GPT and BART) enable sequence-data analysis and content generation, supporting keyword prediction and semantic understanding [2][3][4].

Natural Language Processing (NLP): Combines semantic analysis, intent recognition, and entity-relationship extraction to address the contextual needs behind user queries [5][6][7][27].

Large Language Models (LLMs): Represented by the GPT series, BERT, and T5, and pre-trained on corpora at the hundred-billion scale, they enable keyword clustering, content creation, and conversational query optimization [8][9][10].

AI systems optimize keyword strategies in the following ways:

Competitive Gap Analysis: Using Support Vector Machines (SVM) and decision-tree algorithms to scan competitors' keyword matrices and identify high-potential long-tail keywords [11][12][13].

Intent Prediction Model: Using Bayesian classifiers and the K-Nearest Neighbors (KNN) algorithm to analyze search patterns and automatically label queries as informational, navigational, or transactional [14].

Real-time Trend Tracking: Capturing suddenly emerging keywords through time-series analysis and dynamically adjusting the content direction [15][16].
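The intent-labeling idea above can be sketched with a tiny naive Bayes classifier. This is a minimal illustration under stated assumptions, not the method of any particular SEO tool: the training queries, the `train` and `predict` helper names, and the use of add-one smoothing are all hypothetical choices, and only the Bayesian half of the "Bayesian classifiers and KNN" pairing is shown.

```python
import math
from collections import Counter, defaultdict

# Tiny hand-written training set (hypothetical examples, not real query logs).
TRAIN = [
    ("how to choose a foundation", "informational"),
    ("what is long tail keyword", "informational"),
    ("facebook login page", "navigational"),
    ("youtube official site", "navigational"),
    ("buy iphone 16 pro max", "transactional"),
    ("cheap a4 paper price", "transactional"),
]

def train(samples):
    """Count words per intent label and collect the vocabulary."""
    word_counts = defaultdict(Counter)   # label -> word frequencies
    label_counts = Counter()             # label -> number of queries
    vocab = set()
    for text, label in samples:
        words = text.lower().split()
        word_counts[label].update(words)
        label_counts[label] += 1
        vocab.update(words)
    return word_counts, label_counts, vocab

def predict(query, model):
    """Return the label with the highest naive Bayes log score."""
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in label_counts:
        # log prior + sum of log likelihoods with add-one (Laplace) smoothing
        score = math.log(label_counts[label] / total)
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in query.lower().split():
            score += math.log((word_counts[label][word] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

model = train(TRAIN)
print(predict("buy cheap paper", model))          # transactional
print(predict("how to choose a keyword", model))  # informational
```

A production system would train on real query logs and far more labels; the point here is only that each query is scored against every intent and tagged with the most probable one.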

Generative AI Architecture: Adopting the Encoder-Decoder framework to achieve "text-to-text" conversion, supporting multi-format content output [17][18].

Quality Control Mechanism: Integrating detection tools such as GLTR and Originality.AI, and evaluating text originality through its perplexity [19].

Multi-modal Expansion: Combining visual-search optimization (such as Pinterest Lens) and voice-content adaptation to enhance omnichannel coverage.
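The perplexity signal mentioned under Quality Control Mechanism can be made concrete with a short sketch. It assumes per-token probabilities have already been obtained from some language model; the `perplexity` function and the sample probabilities are hypothetical, and this is not how GLTR or Originality.AI are implemented internally.

```python
import math

def perplexity(token_probs):
    """Perplexity = exp of the average negative log-probability per token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    if any(p <= 0.0 or p > 1.0 for p in token_probs):
        raise ValueError("probabilities must be in (0, 1]")
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)

# Hypothetical per-token probabilities (not real model output).
predictable = [0.9, 0.8, 0.95, 0.85]   # the model found every token likely
surprising = [0.2, 0.05, 0.1, 0.3]     # the model found the tokens unlikely

print(perplexity(predictable) < perplexity(surprising))  # True
```

Low perplexity means the text was highly predictable to the model, which detectors tend to treat as a hint of machine generation. It is a heuristic signal rather than proof of origin.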

3.1.1 The Complete Definition and Core Meaning of EEAT

EEAT is an abbreviation of four words and originates from Google's "Search Quality Evaluator Guidelines". It stands for "Experience, Expertise, Authoritativeness, Trustworthiness", and each dimension has clear evaluation criteria:

| Abbreviation | Full Name | Core Evaluation Points |
| --- | --- | --- |
| E1 | Experience | Whether the content creator has first-hand, personal experience and whether the content is based on actual experience |
| E2 | Expertise | Whether the creator has the knowledge, skills, or professional background in this field, and whether the content is accurate and in-depth |
| A | Authoritativeness | Whether the creator or website is recognized in this field by the industry, users, or third parties, and whether it has endorsements |
| T | Trustworthiness | Whether the content is true and transparent, whether the information sources are reliable, and whether it avoids misleading readers |

Optimizing "Experience": Highlight personal experience. For example, add "practical steps", "pitfalls encountered", and "personal impressions" to the content, and attach evidence, such as operation screenshots for tutorial-type content and real data for case studies.

Optimizing "Expertise": Strengthen professional depth. Display the author's qualifications: add "Author: a senior expert with 10 years in the XX industry" at the bottom of the article.

Optimizing "Authoritativeness": Accumulate external endorsements. Apply for industry certifications; invite industry authorities to contribute or endorse; obtain coverage from authoritative media; accumulate high-quality external links.

Optimizing "Trustworthiness": Build transparent trust. Outdated information reduces credibility. Continuously update the content: mark "Updated in October 2025" to let AI know the content is new.

Google's core mission is to "provide users with the most relevant and valuable information", and EEAT is the core standard for measuring "value" and "reliability":

Direct impact on ranking: Under the same topic, pages with a high EEAT score (such as professional content released by authoritative institutions) will rank higher than pages with a low EEAT score (such as general remarks by unqualified individuals).

Enhance user conversion: Content with high EEAT can build user trust.

Resist algorithm fluctuations: Google frequently updates its algorithms (such as core algorithm updates), but content with "high quality and high trustworthiness" is always algorithm-friendly. Optimizing EEAT can make a website's ranking more stable and less likely to plummet due to algorithm adjustments.

Don't just focus on big keywords; long-tail keywords are the key to AI SEO!

Question-type Long-Tail: For example, "How to choose a foundation for sensitive skin" (10 times better than "foundation"!).

Regional Long-Tail: For example, "Gyms in Chaoyang District, Beijing, that are super suitable for students".

Model and Specification Long-Tail: For example, "2025 New iPhone 16 Pro Max 512GB".

AI likes content with a clear structure and easy-to-extract information:

Use the Q&A Form: Create an FAQ section. For example, "Q: What foundation is suitable for sensitive skin? A: It is recommended to choose a formula without fragrance and with low irritation...".

Use More Lists and Tables: For example, "3 Golden Rules for Choosing a Foundation".

Have Clear Headings: The H1 tag contains the core keyword, and H2 tags use long-tail keywords.

For example, an article titled "How to Bake Bread" with clear steps and an FAQ section answering common questions is more likely to be cited by AI than an ordinary article.
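One common way to make an FAQ section machine-readable is schema.org `FAQPage` markup in JSON-LD. The sketch below builds such an object from question and answer pairs; the `faq_jsonld` helper is a hypothetical name, and the sample pair reuses the example from the text, including its elided ending.

```python
import json

def faq_jsonld(pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }

faq = faq_jsonld([
    ("What foundation is suitable for sensitive skin?",
     "It is recommended to choose a formula without fragrance and with low irritation..."),
])

# The output would typically be embedded in the page inside a
# <script type="application/ld+json"> element.
print(json.dumps(faq, indent=2))
```

Whether a given search engine or AI assistant actually uses this markup is outside the page author's control, so it is best treated as one structural signal among several.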

Many people use AI to generate content, but note:

Rewrite Before Using: Don't publish AI-generated content as is. Rework it and add your own insights.

Start with Small Keywords, Then Move to Big Ones: Start with long-tail keywords and gradually expand.

Combine Batch Generation with High-Quality Content: Don't rely on batch-generated content alone; ensure quality.

Background: A project-management software company with the target keyword "AI project management", facing fierce competition.

Implementation Strategies

Semantic Clustering: Cluster 200 long-tail keywords into 8 themes, then create pages and 30 cluster articles around them.

E-E-A-T Enhancement: Each article contains a CTO expert review box, customer case videos, and third-party security certification Schema.

Predictive Caching: For high-value white-paper pages, AI preloading reduced the Largest Contentful Paint (LCP) time from 3.2s to 1.4s.

Results

The ranking of the target keyword rose from 15th to 3rd.

Marketing-Qualified Leads (MQL) increased by 150%, and the Cost per Lead (CPL) decreased by 40%.

The Core Web Vitals pass rate increased from 62% to 94%.

A long-form content generator based on the GPT-4 architecture, supporting brand-tone customization and real-time integration with Surfer SEO, achieving "optimization upon generation" [21][22].

AI Technology Stack:

Jasper AI + Clearscope + Zapier automated workflow

Implementation Strategies:

Identified 100 micro-themes related to "community management", and AI batch-generated 700 SEO-optimized pages, including term explanations, tool comparisons, and best practices [23].

Each page automatically embedded internal links to build a topic cluster, enhancing the website's authority.

AI monitored page performance and automatically rewrote or merged pages that drew fewer than 100 visits within 3 months.

Key KPIs:

Traffic Growth: Organic traffic increased by 300% within 6 months.

Keyword Coverage: The number of long-tail keyword rankings increased from 500 to 4,200.

Conversion Effect: MQL increased by 180%, and the customer acquisition cost decreased by 40%.

Success Points:

B2B SaaS achieved "full coverage of long-tail keywords" through AI, solving the pain point that traditional content teams cannot scale to cover niche demands.

AI Technology Stack:

Google Cloud AI + Custom Ranking Algorithm + Shopify Integration [24]

Implementation Strategies:

AI analyzed user search behavior and purchase data to dynamically generate "scenario-based" product collection pages, such as "Spring wedding outfits" and "Office casual style".

Each collection page had a unique SEO title and description generated by AI to avoid duplicate-content penalties.

Used AI to predict seasonal trends and targeted keywords like "2025 Autumn new products" 60 days in advance.

Key KPIs:

Revenue Growth: Return on Advertising Spend (ROAS) increased significantly.

Organic Traffic: The proportion of organic traffic increased from 35% to 52%.

Conversion Rate: The conversion rate of collection pages was 45% higher than that of standard product pages.

Success Points:

E-commerce SEO upgraded from "single-product optimization" to "scenario-based theme optimization", and AI achieved "user-demand prediction + dynamic page generation".

4.6 Staples (Office Supplies) - AI Voice-Search Optimization

AI Technology Stack:

Google Assistant Optimization + Schema Markup Automation + Ahrefs Monitoring [25]

Implementation Strategies:

AI analyzed voice-search queries (usually longer and more conversational) and optimized the FAQ page to directly answer questions like "Where can I buy cheap A4 paper?".

Added "HowTo" and "FAQ" structured data to all product pages to increase the recommendation rate of voice assistants.

Used AI to generate natural-language answers, ensuring an average length of 29 words (the optimal length for voice search).
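The answer-length rule above is easy to check automatically. The following is a minimal sketch, assuming words are separated by whitespace; the helper names and the sample answers are hypothetical, and the 29-word target is simply the figure quoted in the case study.

```python
def word_count(text):
    """Count whitespace-separated words."""
    return len(text.split())

def average_words(answers):
    """Mean word count across a list of answers."""
    return sum(word_count(a) for a in answers) / len(answers)

def over_target(answers, target=29):
    """Return the answers that are longer than the target length."""
    return [a for a in answers if word_count(a) > target]

# Hypothetical answers for illustration only.
answers = [
    "You can buy A4 paper in store or online.",
    "Most stores stock several brands of A4 paper near the printer aisle.",
]

print(average_words(answers))     # 10.5
print(len(over_target(answers)))  # 0
```

A check like this could run as a last step after generation, sending any answer in the `over_target` list back for shortening.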

Key KPIs:

Voice Traffic: Traffic from voice search increased by 200%.

Featured Snippet: The share of queries for which the site won a Google Featured Snippet increased from 3% to 18%.

Local Conversion: In-store sales driven by queries related to "nearby stores" increased by 85%.

Success Points:

Planned "Position Zero" optimization in advance, and AI helped capture the nuances of natural-language queries.

AI Technology Stack:

GPT-4 + SEMrush + Custom Attribution Model

Implementation Strategies:

For "cloud computing + industry" combinations (such as "cloud computing in healthcare" and "cloud computing in finance"), AI generated 150 in-depth solution pages.

Each page embedded an ROI calculator. After users entered parameters, AI generated customized reports to collect sales leads.

Used AI to analyze user-behavior paths, identified pages with high conversion intent, and focused external link building on them.

Key KPIs:

Traffic and Conversion: Organic traffic increased by 40%, and the conversion rate increased by 20%.

Lead Quality: The proportion of Sales-Qualified Leads (SQL) increased from 12% to 28%.

Return on Investment: The SEO ROI reached 6.8:1, far exceeding the 2.1:1 of paid search.

Success Points:

The ultimate goal of B2B SEO is "customer acquisition" rather than "traffic", and AI achieved a closed loop of "content → tools → leads" [26].

AI SEO is not an option but a battleground in digital marketing. From "keyword ranking" to "answer control", from "user-initiated search" to "AI-initiated recommendation", from "traffic competition" to "trust accumulation", AI SEO is reconstructing the entire marketing ecosystem.

[1] https://m.163.com/dy/article/K919T28O05564VL8.html

[2] https://doi.org/10.3115/v1/D14-1179

[3] https://www.irjet.net/archives/V12/i2/IRJET-V12I272.pdf

[4] https://doi.org/10.18653/v1/2020.acl-main.703

[5] https://oneclickcopy.com/blog/ai-keywords-how-artificial-intelligence-is-revolutionizing-seo

[6] https://www.millionairium.com/Lead-Generation-Articles/ai-and-seo-benefits-and-limitations/

[7] https://blog.csdn.net/ywxs5787/article/details/151409595

[8] https://www.preprints.org/frontend/manuscript/b16913032bd1606d0a411cbe98d08210/download_pub

[9] https://aircconline.com/csit/papers/vol14/csit142005.pdf

[10] https://www.irjet.net/archives/V12/i2/IRJET-V12I272.pdf

[11] https://www.genrise.ai/_files/ugd/f60dd5_a18ac8fb9e8b4772ae3508982c1d19b1.pdf?index=true

[12] https://ijisrt.com/assets/upload/files/IJISRT23NOV1893.pdf

[13] https://www.supremeopti.com/wp-content/uploads/2024/12/Ultimate-SEO-Ebook_Supreme-Optimization.pdf

[14] https://ijisrt.com/assets/upload/files/IJISRT23NOV1893.pdf

[15] https://www.preprints.org/frontend/manuscript/b16913032bd1606d0a411cbe98d08210/download_pub

[16] https://aircconline.com/csit/papers/vol14/csit142005.pdf

[17] https://new.qq.com/rain/a/20230417A03YX200

[18] https://juejin.cn/post/7449761613269336114

[19] https://www.aibase.com/zh/tool/21603

[20] https://aiclicks.io/blog/best-ai-seo-tools

[21] https://www.ranktracker.com/blog/jasper-ai-seo/

[22] https://www.ranktracker.com/zh/blog/jasper-ai-seo/

[23] https://winningbydesign.com/wp-content/uploads/2025/05/WbD-Internal-AI-Story-Library-Slide-Outlines-2.pdf

[24] https://amandaai.com/wp-content/uploads/2023/01/gina-tricot.pdf

[25] https://madcashcentral.com/utilizing-ai-powered-seo-strategies-for-effective-site-promotion/

[26] https://optimizationai.com/programmatic-ai-seo-content-case-studies/

[27] https://saleshive.com/blog/ai-tools-seo-best-practices-results/#

On December 12th, React officially confirmed that researchers, while validating last week's patches, unexpectedly discovered two new vulnerabilities in React Server Components (RSC).

In the past week, the aftereffects of the React2Shell vulnerability still lingered: servers were hijacked for cryptocurrency mining, cloud vendors imposed emergency bans, and more consequences followed. To mitigate the risks, Vercel even spent $750,000 on vulnerability bounties and emergency response costs in just one weekend. A vulnerability in a front-end framework cut straight through the entire technology stack. React's official team has issued a series of emergency announcements, repeatedly emphasizing "please upgrade immediately." This is already the second large-scale patch update in a short period.

The two vulnerabilities disclosed this time are a high-severity denial-of-service (DoS) flaw, CVE-2025-55184, where a single request can crash the server, and a medium-severity source code leak, CVE-2025-55183, which may expose the source code of React Server Components.

1 One React Vulnerability Shakes the Global Web

In the past week, a vulnerability known as React2Shell has swept through the entire Internet industry. The fundamental reason for such a shock is simple: React matters enormously. It is almost the "default foundation" of the modern web.

From Meta's own Facebook and Instagram to large-scale platforms such as Netflix, Airbnb, Shopify, Walmart, and Asana, all rely on it. On top of that comes a developer ecosystem millions strong, and many frameworks depend on the vulnerable React packages.

The React team tracked it as CVE-2025-55182, which received the maximum severity score of 10.0 in the Common Vulnerability Scoring System (CVSS). As the creator and main maintainer of Next.js, Vercel also assigned a separate CVE number, CVE-2025-66478, to this issue.

What makes it terrifying is that attackers can exploit this vulnerability with almost no preconditions. Cloud security vendor Wiz observed that 39% of cloud environments contain Next.js or React instances vulnerable to CVE-2025-55182. It is estimated that over two million servers were vulnerable at the time of disclosure. Even worse, in its experimental verification, Wiz found that exploitation is "almost 100% successful" and reliably achieves full remote code execution.

The affected components include versions 19.0 to 19.2.0 of core modules such as react-server-dom-webpack, as well as the default configurations of multiple React frameworks and bundlers, such as Next.js, React Router, and Vite RSC. For many frameworks (especially Next.js with App Router), RSC is enabled by default.

When a severity-10 vulnerability is made public, the story is not just "the vulnerability was reported and fixed." There is real-world damage.

Many developers publicly shared their experiences of being affected on X. Developer Eduardo was one of them. After his server was blocked, he immediately checked the logs and found that the machine had long been "taken over": CPU usage soared to 361%, suspicious processes were frantically consuming resources, and the machine kept connecting to an IP in the Netherlands. "My server is no longer running my application. It's mining for someone else!"

Even worse, the intrusion didn't come through SSH brute-forcing but from inside the Next.js container. After exploiting the vulnerability, attackers could execute any code they wanted on the server, then deploy more "professional" malicious programs, and even disguise the processes as web services with names like nginxs or apaches to reduce the risk of exposure. "It infected my entire server just through one Next.js Docker container!"

Finally, he warned, "If Docker is still running with ROOT privileges and the exploited React version hasn't been updated, you'll be hacked soon." (With root privileges, an attacker can install cron jobs, systemd units, and persistent scripts, ensuring the malware survives a restart.)

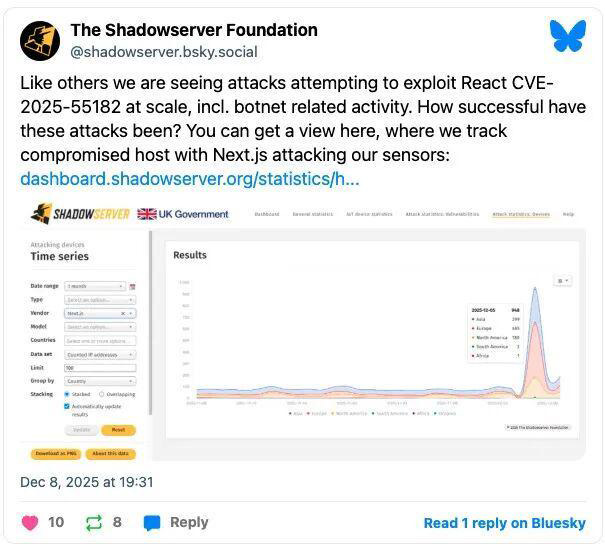

The non - profit security organization ShadowServer Foundation stated that since the disclosure of the vulnerability, the attack traffic from Next.js assets controlled by botnets has suddenly increased tenfold. "Like other institutions, we've also observed large - scale attempts to exploit React's CVE - 2025 - 55182, including activities related to botnets."

Why It Can Be Fixed with (Almost) "One Line of Code"

Security researcher Lachlan Davidson was the first to disclose this issue and released a detailed technical analysis. He described the vulnerability as "a serious lack of security checks intertwined with a highly creative exploitation mechanism."

The research process itself was also extremely challenging. It was revealed that he invested over 100 hours in this. And the independent researcher Maple, who was the first to publicly reproduce the attack code, successfully constructed a minimum viable attack chain within dozens of hours after the patch was made public, demonstrating the risk that the vulnerability could be quickly weaponized.

Simply put, this vulnerability doesn't lie in some "obscure edge feature" but in the core communication mechanism of React Server Components.

To make server components fast enough, React designed the Flight protocol. You can think of it as a set of "front-end-specific data channels" built into React. Instead of sending the complete page data to the browser all at once, the server sends data in batches according to the structure of the rendering tree. This way, the interface can first render the parts that are ready, and the rest is filled in gradually.

The problem is that this capability is very powerful. The Flight protocol needs to transmit not only strings, numbers, and JSON data but also "incomplete things," such as intermediate states like a Promise, and it has to reconstruct the component tree. To achieve this, React must deserialize and interpret client requests on the server side, restoring them into objects that can be processed further.

This is where the vulnerability lies. Attackers can forge a special HTTP request and send content "that looks like normal Flight data" to any React Server Function endpoint. When React parses this data, it mistakes it for legitimate internal objects and continues processing as usual. As a result, the data constructed by the attacker is treated as part of the code execution path, ultimately triggering remote code execution on the server.

The entire process requires no login and no credentials, and there is no need to bypass traditional security boundaries. Simply because React lacked a basic hasOwnProperty check in its internal serialization structures, a crucial runtime boundary was breached.
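The role of an "own property" check can be illustrated with a loose Python analogy. This is not React's code and not the actual patch, which lives in JavaScript; the `Exports` class and both resolver functions are hypothetical. The sketch only shows the general pattern: when a name arrives from an untrusted request, looking it up without checking that the object itself defines it can hand back inherited internals.

```python
class Exports:
    """Stand-in for a table of functions a server means to expose (hypothetical)."""
    def __init__(self):
        self.render_profile = lambda: "profile"

def resolve_unchecked(exports, name):
    # getattr also returns inherited attributes such as __class__ or __init__,
    # which the author never meant to expose to callers.
    return getattr(exports, name)

def resolve_checked(exports, name):
    # Only accept names the object itself defines (the "own property" check).
    own = vars(exports)
    if name not in own:
        raise KeyError(f"unknown export: {name!r}")
    return own[name]

exports = Exports()
print(resolve_checked(exports, "render_profile")())         # profile
print(resolve_unchecked(exports, "__class__") is Exports)   # True: inherited internals leak

try:
    resolve_checked(exports, "__class__")
except KeyError as err:
    print("rejected:", err)
```

The checked version is one extra membership test, which matches the article's point that a very small guard can close off a very large attack surface.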

After Lachlan Davidson responsibly reported the vulnerability to Meta, Meta immediately collaborated with the React team and launched an emergency patch in just four days. In terms of implementation, it's almost "adding one line of code," yet it blocks an attack chain capable of destroying the server.

2 Vercel, Cloudflare, etc. Are Innocently Caught in the Crossfire

When a severity-10 vulnerability is exposed, the first to be hit is often not a small team but the entire industry chain relying on React, especially front-end hosting and serverless platforms. Leading platforms such as Vercel are almost inevitably at the center of the storm because they are not only the key maintainers of Next.js but also the default entry points for a vast number of applications.

During the emergency response phase, vendors immediately rolled out WAF (Web Application Firewall) rules. Companies like Vercel, Cloudflare, AWS, Akamai, and Fastly all deployed rules to intercept known React2Shell exploit payload patterns. This does buy some time, but a WAF can only serve as a buffer, not a solution.

At its core, a WAF matches requests against rules, and attackers can adjust the form of the payload to slip past them. Many applications don't use these service providers at all: self-hosted, privately deployed, or directly exposed instances are beyond the reach of any WAF. More importantly, mitigation at the edge is only ever one layer of a multi-layer defense, not a patching strategy. For a 10/10 RCE (Remote Code Execution) vulnerability, the only real fix is to upgrade React/Next and redeploy, completely removing the vulnerable code from the running environment.
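Why rule matching makes a fragile primary defense can be shown with a deliberately harmless toy. Everything here is hypothetical: the signature string, the `waf_allows` filter, and the `app_sees` parser do not correspond to any vendor's rules or to the React2Shell payload. The sketch only demonstrates that two byte sequences can differ at the edge yet decode to the same value inside the application.

```python
import json

BLOCKED_SIGNATURE = "FORBIDDEN_MARKER"   # hypothetical rule, not a real WAF signature

def waf_allows(raw_body: str) -> bool:
    """Toy edge filter: block requests whose raw text contains the signature."""
    return BLOCKED_SIGNATURE not in raw_body

def app_sees(raw_body: str) -> str:
    """Toy application: parses the JSON body, which decodes escape sequences."""
    return json.loads(raw_body)["value"]

plain = '{"value": "FORBIDDEN_MARKER"}'
escaped = '{"value": "FORBIDDEN\\u005fMARKER"}'   # same value, different bytes

print(waf_allows(plain))     # False: the raw text matches the rule
print(waf_allows(escaped))   # True: the raw text no longer matches
print(app_sees(escaped))     # FORBIDDEN_MARKER: the app still receives the value
```

Vendors can and do normalize input before matching, but every new encoding or structural variation is another rule to write, whereas removing the vulnerable code removes the problem itself.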

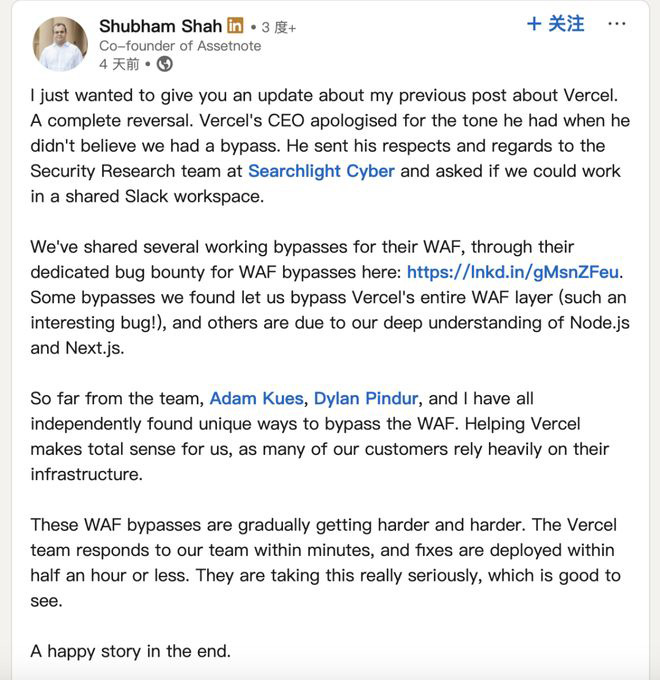

Precisely because the statement "don't rely on WAF as the main repair method" hit a sore spot, there was quite a bit of contention in the industry. Shubham Shah, the co-founder of Assetnote, posted on LinkedIn accusing the Vercel CEO of pressuring him, in an almost bullying manner, to remove his tweet about "not relying on WAF to protect against this vulnerability". Shubham Shah said:

"The Vercel CEO tried to deny the fact that their WAF could be bypassed. The vulnerability involves the latest Next.js/RSC remote code execution. He asked me to remove the tweet about 'not relying on WAF to protect against this vulnerability' in an almost bullying manner. My advice at that time was that users should directly patch their own systems instead of relying on WAF - because we could already bypass Cloudflare's protection at that time, and now Vercel's WAF can also be bypassed. This advice still holds true today.

WAFs do have their role, but the core solution is always to fix system vulnerabilities. Currently, many users have difficulty identifying the risk points in their own systems, and defenders need clear information to guide the patching work. WAF vendors like Vercel should not pressure researchers to cover up the fact that their WAF can be bypassed.

I just released an update for the react2shell-scanner tool, adding the --vercel-waf-bypass parameter. This feature is based on the attack payload designed by Adam Kues from the Searchlight Cybersecurity Research Team and can effectively bypass Vercel's WAF protection."

Trying to cover up problems is always in vain. As more people found ways around Vercel's WAF, Vercel's attitude changed markedly. The Vercel CEO has apologized for his earlier attitude in questioning whether the WAF could be bypassed and expressed his respect for the Searchlight Cybersecurity Research Team.

The Vercel team responded to Shubham Shah's team's report within a few minutes and deployed a fix within half an hour. Shubham Shah stated in his latest LinkedIn post:

"The Vercel CEO has apologized for his previous attitude when questioning whether the WAF could be bypassed and expressed his respect to the Searchlight Cybersecurity Research Team. He also invited us to collaborate in a shared Slack workspace.

We have submitted multiple valid WAF-bypass techniques through their dedicated vulnerability bounty program:

https://lnkd.in/gMsnZFeu

Some of these vulnerabilities allowed us to completely bypass Vercel's WAF protection layer (these vulnerabilities are very interesting!), and others benefited from our in-depth understanding of Node.js and Next.js.

So far, Adam Kues, Dylan Pindur, and I in the team have independently discovered different bypass methods. Assisting Vercel is crucial to us because many of our clients deeply rely on its infrastructure. The difficulty of bypassing the WAF is gradually increasing. The Vercel team can respond to our reports within a few minutes and deploy a fix within half an hour. Their attention to this matter is reassuring. In the end, it turned out to be a happy ending."

Under the dual pressure of the new vulnerability and the React Level 10 vulnerability, Vercel temporarily launched what could be called the most radical security patching plan in history.

On December 11th, on YouTube, a program called "The Programming Podcast" analyzed how Vercel spent $750,000 in just one weekend to stop this "perfect hacker" attack, as well as the line of code in the Dockerfile that could expose users' environments.

This podcast mentioned that after the incident was exposed, Vercel quickly launched an emergency response process, collaborating with the React team, the HackerOne community, and security researchers. They completed the investigation and repair in just one weekend and paid a total of $750,000 in vulnerability bounties. This handling speed and transparency were praised by the industry as "a highly exemplary public relations and technical response."

The incident did not cause more extensive damage because the community and the platform responded quickly. After the vulnerability was made public, Vercel worked with HackerOne to open all relevant vulnerabilities and edge cases to the white-hat community. Within three days and nights, they received 17 to 19 repair suggestions and edge cases involving different levels of security risk. In the end, Vercel paid approximately $750,000 in bounties to reward the developers and security researchers who took part in the repair at a crucial moment. Engineers from multiple teams, including React and Next.js, also worked through the weekend to get the patches out quickly.

Due to the extremely wide user base of React, besides Vercel being severely affected, Cloudflare was also thrown into chaos for a while.

In an attempt to mitigate the impact of the React2Shell vulnerability, Cloudflare hastily introduced a change that affected approximately 28% of the HTTP traffic it serves. A large number of websites relying on Cloudflare returned a 500 Internal Server Error and were inaccessible for a time.

Dane Knecht, the Chief Technology Officer of Cloudflare, later stated that this incident was not caused by a cyberattack but by an internal change introduced while the company was hastily dealing with the high-risk vulnerability in React Server Components.

In addition to these platforms, NHS England's National Cyber Security Operations Centre (CSOC) also said on Thursday that multiple functional proof-of-concept exploits for CVE-2025-55182 already exist, and warned that "the possibility of continued successful exploitation of this vulnerability in a real-world environment is very high."

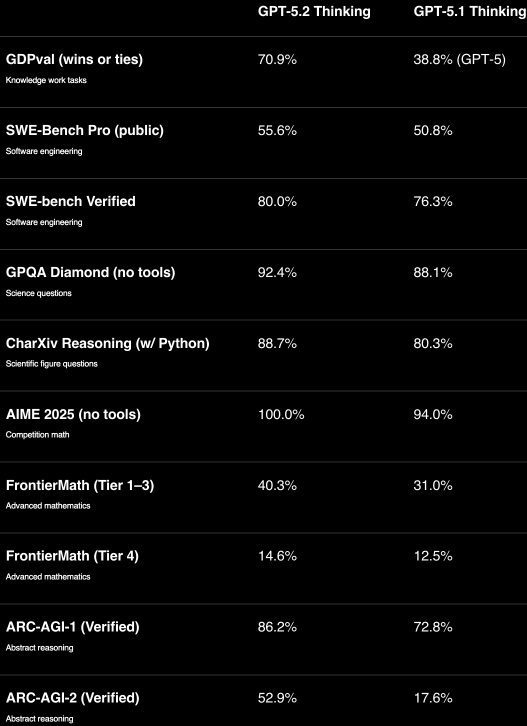

Guys, who can relate! Tasks like making spreadsheets, writing code, and processing long texts in daily work are just a headache 😩. Every time I hit a complex problem, I wish I had a super assistant to help. Well, GPT-5.2, released by OpenAI, has become my savior 🌟!

GPT-5.2 is positioned as "the most suitable model for daily professional use". After months of research and development, it aims to create more economic value for us. Compared with the previous GPT-5.1, it has significantly improved in tasks such as creating spreadsheets and building presentations. One immunology researcher, for example, used GPT-5.2 Pro to generate key questions about the immune system and found the depth and persuasiveness excellent 👍.

Moreover, OpenAI has made remarkable improvements in "AI agent workflows", aiming to make ChatGPT a more powerful personalized assistant. Many companies, such as Notion and Shopify, already received early testing access. GPT-5.2 pays more attention to practicality and structured output, and the interactive experience is also great.

It is now rolling out gradually on ChatGPT, starting with paid users. GPT-5.1 will be taken offline in about three months. OpenAI is deploying it "progressively" to keep our experience smooth. Guys, with such an amazing new model, start looking forward to it right away 💗!

#GPT-5.2 #OpenAI #AI Assistant #Office Efficiency Improvement #New Model Release

Hey guys 👋, on November 29, 2025, the 2025 China Artificial Intelligence Conference and the Annual Meeting of Deans (Department Heads) of National Artificial Intelligence Institutes kicked off in Beijing 🔥!

At the conference, the "White Paper on Beijing's Artificial Intelligence Industry (2025)" was released. This white paper is really informative ✨! It points out that in 2025, global artificial intelligence has evolved from single - point technological breakthroughs to ecological collaborative innovation. The development achievements of Beijing's artificial intelligence industry are remarkable 👏.

The industry has grown in both quantity and quality. In the first half of 2025, the core industrial scale reached 215.22 billion yuan, up 25.3% year on year, and it is expected to exceed 450 billion yuan for the whole year! There are over 2,500 AI enterprises, and 183 large models have been filed. Moreover, universities, research institutes, new-type R&D institutions, and core enterprises have produced numerous cutting-edge results. The policy system is rich, the industrial ecosystem is full of vitality, investment and financing are active, and enthusiasm for international cooperation has also increased.
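For anyone who wants to sanity-check the numbers above, here is a minimal sketch of the arithmetic. It only uses the figures quoted from the white paper; the helper name `implied_prior_value` is made up for illustration, and the "second-half gap" is simply the full-year forecast minus the first-half figure, not a number the white paper itself states.

```python
def implied_prior_value(current: float, growth_pct: float) -> float:
    """Back out the prior-period value from a current value and its growth rate (in percent)."""
    return current / (1 + growth_pct / 100)


H1_2025 = 215.22           # billion yuan, core industrial scale in H1 2025 (from the white paper)
YOY_GROWTH = 25.3          # percent, year-on-year growth (from the white paper)
FULL_YEAR_FORECAST = 450   # billion yuan, expected full-year floor (from the white paper)

# Implied H1 2024 scale, assuming the 25.3% compares H1 2025 with H1 2024
h1_2024 = implied_prior_value(H1_2025, YOY_GROWTH)

# What the second half would need to contribute to pass the forecast
h2_gap = FULL_YEAR_FORECAST - H1_2025

print(f"Implied H1 2024 scale: {h1_2024:.2f} billion yuan")
print(f"H2 2025 needed to pass {FULL_YEAR_FORECAST} billion: {h2_gap:.2f} billion yuan")
```

In other words, the second half would have to come in slightly above the first half for the full-year forecast to hold.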

In the future, artificial intelligence will drive the transformation of productivity, expand the boundaries of cognition, and promote the popularization of technology. Beijing's position in the field of artificial intelligence is rock-solid 💯! Let's pay attention to this promising industry together. Maybe we can seize new opportunities 😎!

#Beijing Artificial Intelligence #Industrial White Paper #Core Output Value #AI Development #Cutting-edge Technology

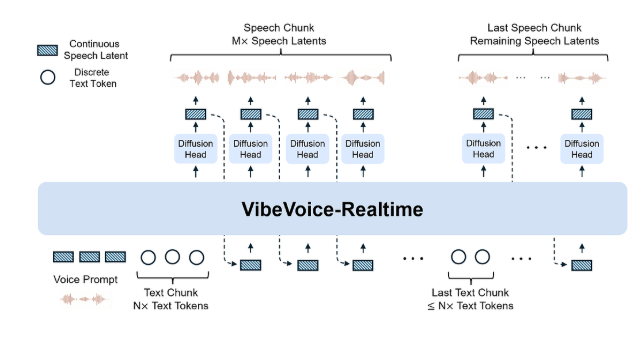

Guys, today I must share with you Microsoft's open-source real-time speech model VibeVoice-Realtime-0.5B 👏!

Traditional TTS models often take 1 to 3 seconds to start up, and that lag really hurts the experience 😫. This has been the main pain point of using speech models. VibeVoice-Realtime-0.5B solves this problem nicely: on average, it takes only about 300 milliseconds from text input to sound output, so the delay is barely noticeable. It's just like having a conversation with a real person. As soon as you type, the other side starts to respond. It's extremely smooth 💯.

Its capabilities don't stop there! It can generate up to 90 minutes of audio in one go, and the whole thing sounds smooth and natural, like a professional broadcaster reading. Moreover, it natively supports conversations with up to 4 speakers, with smooth emotional transitions. The built-in emotion perception module can recognize emotions automatically without manual annotation, so it's ready to use out of the box 👍.

I tried it myself. I used it on HuggingFace to read the first chapter of "The Three-Body Problem". There were no voice breaks, and the effect was excellent. Its English performance is close to commercial level, and it's also very good in Chinese. There is still room for improvement in handling some polyphonic characters and neutral tones, but the team says it will release a fine-tuned version. With its lightweight design, it can run at real-time speed on an ordinary laptop and can already be integrated into many tools.

Currently, this model has been completely open-sourced and supports commercial use. There are also many interesting demos in the community. Guys, don't miss it. Hurry up and give it a try 👇!

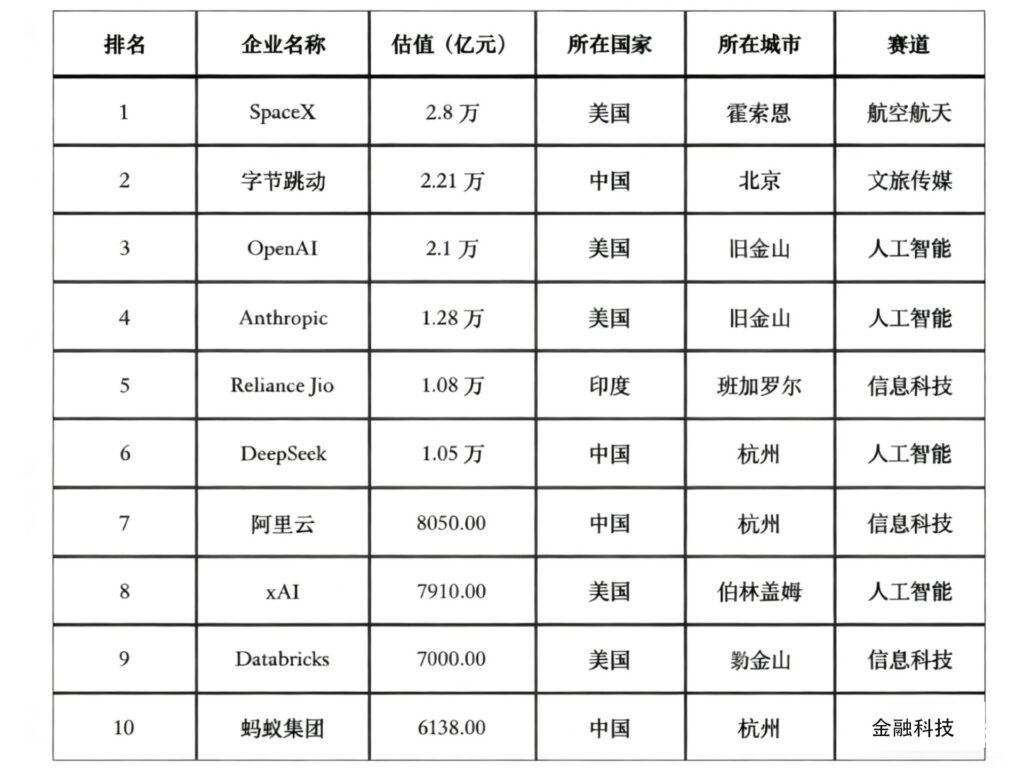

Dear friends, the Global Unicorn 500 in 2025 has been revealed! As soon as I heard the news, I rushed to share it with you all!

This conference was held in Laoshan District, Qingdao, Shandong Province. The released "Report on the Global Unicorn 500 in 2025" is of great value. In 2025, the total valuation of global unicorn companies reached 39.14 trillion yuan, an increase of 30.71% compared with last year. This figure is astonishing, even exceeding Germany's GDP 💯. However, the market environment is a bit tough. Only 12 unicorns successfully went public, but merger and acquisition activities increased significantly.

In terms of enterprise distribution, China and the United States are the absolute "kings", together accounting for 74.8% of the enterprises and 86.8% of the total valuation. The United States has strong innovation capabilities in fields such as artificial intelligence, while China performs strongly in advanced manufacturing and automotive technology. China has more than six times as many advanced manufacturing enterprises as the United States, and their total valuation is nearly 2 trillion yuan higher 👏.
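To make those shares more concrete, here is a rough back-of-the-envelope sketch. It assumes the list contains exactly 500 companies (taken from its name) and that the 39.14 trillion yuan total applies to those same 500 companies; neither assumption is confirmed by the report excerpt above, so treat the averages as illustrative only.

```python
# Figures quoted from the report; LIST_SIZE is an assumption based on the list's name.
LIST_SIZE = 500
TOTAL_VALUATION = 39.14      # trillion yuan
CN_US_COUNT_SHARE = 0.748    # China + US share of enterprises
CN_US_VALUE_SHARE = 0.868    # China + US share of total valuation

# Split the list into "China + US" and "everyone else"
cn_us_count = round(LIST_SIZE * CN_US_COUNT_SHARE)
rest_count = LIST_SIZE - cn_us_count
cn_us_value = TOTAL_VALUATION * CN_US_VALUE_SHARE
rest_value = TOTAL_VALUATION - cn_us_value

# Average valuation per company, converted to billion yuan (1 trillion = 1000 billion)
cn_us_avg = cn_us_value / cn_us_count * 1000
rest_avg = rest_value / rest_count * 1000

print(f"China + US: {cn_us_count} companies, avg {cn_us_avg:.2f} billion yuan")
print(f"Rest of world: {rest_count} companies, avg {rest_avg:.2f} billion yuan")
```

Under these assumptions, the average Chinese or American unicorn on the list is valued at more than twice the average of those from the rest of the world.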

In terms of urban distribution, concentration at the top is obvious: the top ten cities are home to more than half of the unicorn enterprises. This also shows the important position of these cities in the global innovation ecosystem.

I have to say that these unicorn enterprises are really amazing! Let's all feel the power of this innovation together 🤩.

#The Global Unicorn 500 in 2025 #Chinese and American Enterprises #Unicorn Enterprises #Advanced Manufacturing #Innovation Ecosystem

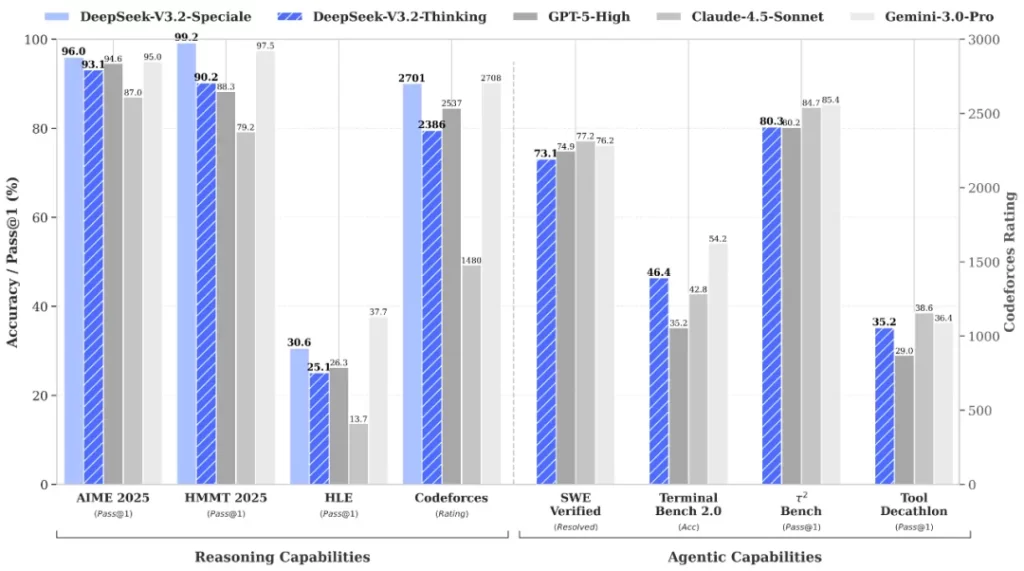

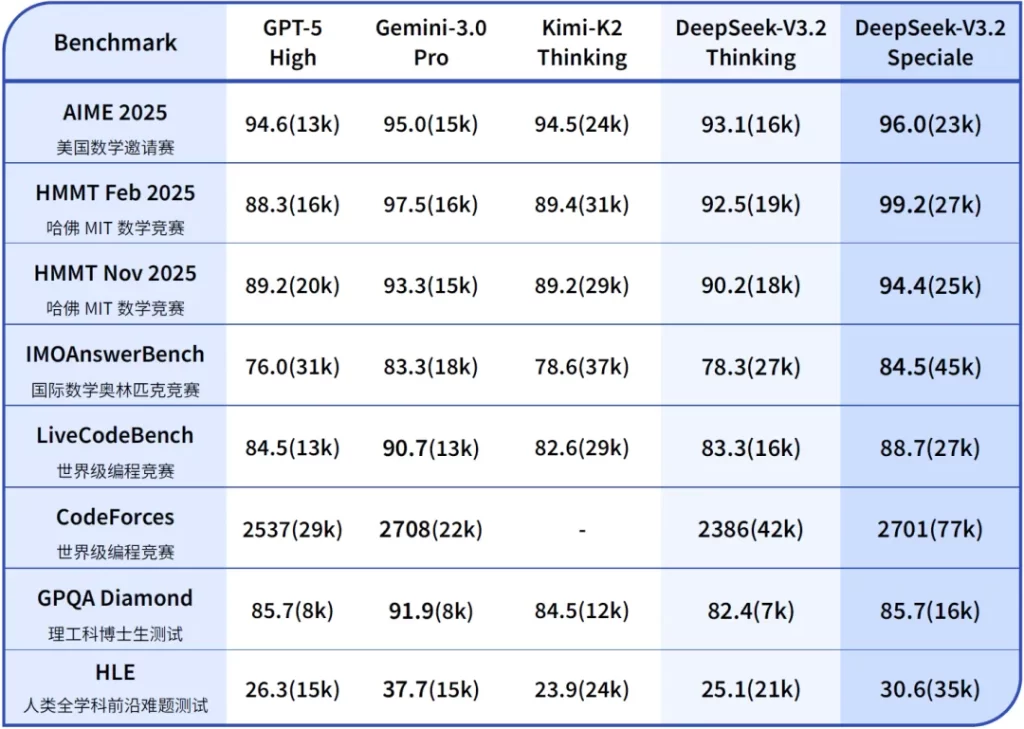

Dear friends! DeepSeek has made another big move! 🎉 Today, two official models, DeepSeek-V3.2 and DeepSeek-V3.2-Speciale, were released simultaneously. This release pushes reasoning ability to a new height and also solves the major pain point of Agent tool-calling!

🌟 Explosive Reasoning Ability

The standard version of V3.2 balances reasoning and efficiency. In public tests, it reaches the level of GPT-5, only slightly below Gemini-3.0-Pro. The Speciale version is even more powerful! 🐂 It is an enhanced long-thinking version with added mathematical theorem-proving ability. It has won four gold medals in IMO/CMO/ICPC/IOI, and its ICPC result is even better than the second-ranked human!

🤖 Upgraded Agent Ability

This is DeepSeek's first model with "thinking + tool-calling"! It proposes a large-scale Agent training data synthesis method, supports tool-calling in thinking mode, and generalizes well. It ranks first among open-source models in agent evaluations, greatly narrowing the gap with closed-source models.

🔧 Two Versions for Your Choice

💻 Open-source and Ecosystem-friendly

The model has been open-sourced on HuggingFace and ModelScope. The thinking mode supports Claude Code, and the API has also optimized the tool-calling process (you can let the model continue thinking by sending its chain of thought back).
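The "send the chain of thought back" idea can be sketched roughly as follows. This is only an illustration of how the conversation history might be assembled, with no network call: the `reasoning_content` field name, the message layout, and the `get_weather` tool are assumptions for this example, so check the official API documentation before relying on any of them.

```python
from typing import Any, Optional

Message = dict[str, Any]


def append_assistant_turn(
    messages: list[Message],
    content: str,
    reasoning: Optional[str],
    tool_calls: list[dict[str, Any]],
) -> list[Message]:
    """Return a new history with the assistant turn added, keeping its reasoning."""
    turn: Message = {"role": "assistant", "content": content, "tool_calls": tool_calls}
    if reasoning is not None:
        # Assumed field name: the chain of thought is passed back so the
        # model can pick up its reasoning after the tool result arrives.
        turn["reasoning_content"] = reasoning
    return messages + [turn]


def append_tool_result(messages: list[Message], tool_call_id: str, result: str) -> list[Message]:
    """Return a new history with the tool output added for the given call."""
    return messages + [{"role": "tool", "tool_call_id": tool_call_id, "content": result}]


# Hypothetical exchange: the model thinks, asks for a tool, and we answer it.
history: list[Message] = [{"role": "user", "content": "Do I need an umbrella in Beijing today?"}]
call = {
    "id": "call_1",
    "type": "function",
    "function": {"name": "get_weather", "arguments": '{"city": "Beijing"}'},
}
history = append_assistant_turn(history, "", "I should look up the weather first.", [call])
history = append_tool_result(history, "call_1", '{"condition": "light rain"}')

# `history` is what would be sent in the next request so thinking can continue.
for message in history:
    print(message["role"], sorted(message.keys()))
```

The point of the design is that the assistant turn carrying the tool call also carries its reasoning, so the next request does not force the model to start thinking from scratch.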

Are there any friends who have used DeepSeek? Come and share your experience in the comment section below 👇

#DeepSeekV3.2 #Open-source AI #The Ceiling of Reasoning Ability #Agent Tool-calling #AI Model Update

#Cutting-edge Technology #A Substitute for GPT5 #ICPC Gold-medal AI #Upgrade of Programming Assistant

Dear friends, there's big news in the AI community today! 🔥 Claude Opus 4.5 might be officially launched today!

Earlier, a new model entry with the code name "Claude Kayak" briefly appeared on the AI benchmark platform Epoch AI, marked with today's release date. Although it was quickly removed, it still drew intense attention from the global AI community. 🤩 The industry generally believes that "Claude Kayak" is Claude Opus 4.5, the flagship model that Anthropic is about to launch.

As the flagship of the Claude 4 series, Opus 4.5 is expected to improve significantly in complex reasoning, multi-step agent tasks, and code generation. It is expected to break the 80% mark in authoritative evaluations, competing directly with OpenAI GPT-5.1 and Google Gemini 3.0 Pro. 👏

Since the release of Opus 4.1 in August this year, Anthropic has successively launched Sonnet 4.5 and Haiku 4.5. If Opus 4.5 makes its debut as scheduled this time, the entire Claude 4 series will be updated, and its position in the fields of multimodality and enterprise-level AI will be more solid. 👍

Developers are looking forward to stronger agent coordination and longer context handling from the new model, but they also worry that its high computing power requirements will leave it "in limited supply", as has happened with the Opus series. Let's all wait for the official news. If it's really released, this will definitely be a major event in the AI competition at the end of 2025!

#ClaudeOpus4.5 #AI Release #GPT-5.1 #GeminiPro #AI Competition

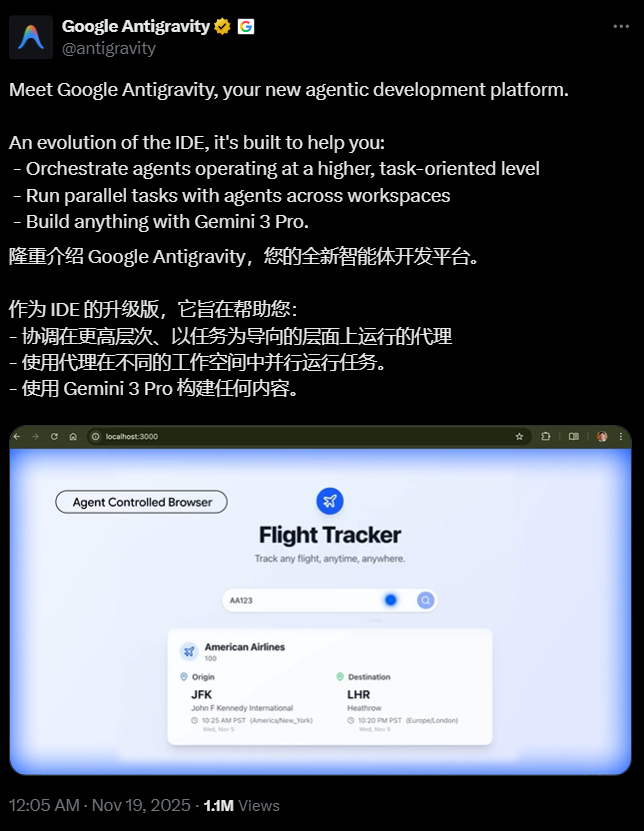

Dear friends, there's a big surprise in the AI development community! 🎉 Not only has Google released its new-generation flagship large model Gemini 3, but it has also launched a brand-new AI-native integrated development environment, Google Antigravity. This is truly set to "revolutionize" the development circle!

This so-called "anti-gravity" agent-based development platform upgrades AI from an ordinary code assistant to an extremely powerful "active partner" 🤝. It addresses the pain points of competitors like Cursor and Claude, freeing developers from tedious low-level coding! Antigravity is now in public preview and supports Windows, macOS, and Linux. What's more, it's completely free 🆓, and the quota for Gemini 3 Pro is quite generous. Who wouldn't love this!

🌟 Its awesomeness doesn't stop there!

Antigravity is not only deeply integrated with Gemini 3 Pro but also supports Claude Sonnet 4.5 and OpenAI's open-source models, so its ecosystem compatibility should be very strong going forward! It can now be downloaded and tried from the official website (antigravity.google). The quota refresh cycle is very user-friendly, and ordinary developers basically won't run out.

The AI IDE has officially entered the era of "multi-agent, verifiable, visual feedback". Cursor, Claude Dev, Windsurf, etc. are probably under great pressure 😜. I sincerely suggest that all front-end, full-stack, and AI engineers give it a try as soon as possible. Maybe this will be the development tool most worth switching to in 2025!

📱 Download address: https://antigravity.google/download

Have any of you used it, dear friends? Come and share your feelings in the comment section below 👇